AI Risk Assessment Framework 2025: Step-by-Step Implementation Guide

As AI systems become integral to business operations, understanding and managing AI-specific risks is critical for organizational success and compliance. The NIST AI Risk Management Framework provides a structured approach, but many organizations struggle with practical implementation. This comprehensive guide walks through building an AI risk assessment program from scratch, with actionable methodologies, templates, and real-world examples.

Why AI Risk Assessment Is Different

Traditional risk assessment frameworks don't adequately address AI-specific challenges:

Unique AI Risk Characteristics

- Non-Deterministic Behavior - AI systems produce variable outputs, making risk prediction complex

- Emergent Risks - Unintended capabilities and behaviors not present during development

- Data Dependencies - Risks from training data quality, bias, and poisoning

- Model Drift - Performance degradation over time as real-world data shifts

- Opacity - Black-box nature makes root cause analysis difficult

- Cascading Impacts - AI decisions affect downstream systems and stakeholders

The NIST AI RMF: Foundation for Risk Assessment

The NIST AI Risk Management Framework, released in January 2023, provides voluntary guidance for managing AI risks. It's designed to be flexible, rights-preserving, and applicable across sectors.

Core Functions Overview

1. GOVERN

Establish culture, policies, and processes for AI risk management throughout the organization.

- Create AI governance structure with clear roles and responsibilities

- Define risk tolerance and appetite

- Establish policies covering AI development, deployment, and monitoring

- Integrate AI risks into enterprise risk management

2. MAP

Understand the context in which AI systems operate and identify potential risks.

- Document AI system purpose, capabilities, and limitations

- Identify stakeholders and their interests

- Map data sources, processing, and outputs

- Recognize potential harms and negative impacts

3. MEASURE

Quantify and assess identified risks using appropriate methodologies and metrics.

- Evaluate technical performance (accuracy, robustness, fairness)

- Assess security vulnerabilities

- Measure bias and fairness across subgroups

- Test for adversarial robustness

4. MANAGE

Implement strategies to mitigate risks and monitor effectiveness over time.

- Prioritize risks based on severity and likelihood

- Deploy technical and procedural controls

- Monitor AI systems continuously

- Update risk assessments as systems evolve

💡 Key Principle

NIST AI RMF emphasizes that GOVERN is ongoing and intersects with all other functions. Risk management isn't a one-time activity but a continuous process embedded in organizational culture.

Step 1: Establish AI Governance Foundation (GOVERN)

Define Governance Structure

Roles and Responsibilities

AI Governance Structure:

Executive Level:
└── AI Steering Committee
    ├── Executives from IT, Legal, Compliance, Product
    └── Meets quarterly to review AI strategy and risks

Management Level:
├── AI Risk Manager
│   ├── Owns AI risk assessment program
│   └── Reports to CISO and CRO
└── AI Ethics Officer
    ├── Ensures responsible AI practices
    └── Reviews high-risk AI deployments

Operational Level:
├── AI Development Teams
│   ├── Implement technical controls
│   └── Document systems and conduct testing
└── Model Validators
    ├── Independent testing and validation
    └── Sign-off on production deployments

Create Risk Appetite Statement

Example AI Risk Appetite Statement:

[Organization Name] maintains a conservative risk appetite for AI systems with the following guidelines:

1. ZERO TOLERANCE risks:

- Discrimination violating civil rights laws

- Unauthorized access to customer PII

- Safety-critical failures (medical, autonomous vehicles)

2. LOW APPETITE risks:

- Reputation damage from AI errors

- Regulatory non-compliance

- Significant bias in decision-making

3. MODERATE APPETITE risks:

- Performance degradation (>10% accuracy loss)

- User experience friction from safety controls

- Development velocity reduction for compliance

4. ACCEPTABLE risks:

- Minor performance variations

- Edge case failures with minimal impact

- Non-critical feature delays for security

Develop AI Policies

Essential AI Policies:

- AI Development Policy - Standards for model training, testing, documentation

- AI Deployment Policy - Requirements before production release

- AI Monitoring Policy - Continuous performance and safety monitoring

- Data Governance Policy - Training data sourcing, quality, privacy

- Incident Response Policy - Procedures for AI failures and compromises

- Third-Party AI Policy - Vendor assessment and approval process

Step 2: System Context and Risk Identification (MAP)

AI System Documentation Template

AI SYSTEM PROFILE

1. SYSTEM IDENTIFICATION

Name: [System Name]

Version: [Version Number]

Owner: [Team/Department]

Criticality: [Low/Medium/High/Critical]

2. PURPOSE AND SCOPE

Business Problem: [What problem does this solve?]

Intended Use: [How should it be used?]

Out-of-Scope Uses: [Explicitly prohibited uses]

User Population: [Who interacts with this system?]

3. TECHNICAL ARCHITECTURE

Model Type: [LLM, Computer Vision, etc.]

Base Model: [e.g., GPT-4, Custom CNN]

Training Data: [Source and characteristics]

Input Data: [What data does it process?]

Output: [What decisions/recommendations?]

Integration Points: [Connected systems]

4. STAKEHOLDER ANALYSIS

Primary Users: [Direct users]

Affected Parties: [Indirectly impacted]

Decision Authority: [Who can override AI?]

Accountability: [Who is responsible for outcomes?]

5. POTENTIAL HARMS

Technical Failures: [System errors, outages]

Bias/Fairness: [Discriminatory outcomes]

Privacy: [Data exposure risks]

Security: [Attack vectors]

Societal: [Broader ethical concerns]

Risk Identification Methodology

1. Threat Modeling for AI Systems

AI Threat Model Components:

STRIDE Framework Adapted for AI:
├── Spoofing
│   └── Model impersonation, API key theft
├── Tampering
│   └── Training data poisoning, model backdoors
├── Repudiation
│   └── Lack of audit trails for AI decisions
├── Information Disclosure
│   └── Training data extraction, embedding inversion
├── Denial of Service
│   └── Resource exhaustion, API flooding
└── Elevation of Privilege
    └── Prompt injection, jailbreaking

Additional AI-Specific Threats:
├── Model Drift
├── Bias Amplification
├── Adversarial Attacks
├── Unintended Capabilities
└── Cascading Failures

2. Risk Catalog Development

Common AI Risk Categories:

| Risk Category | Examples |
|---|---|
| Performance Risks | Low accuracy, model drift, edge case failures |
| Bias & Fairness | Discriminatory outcomes, disparate impact |
| Security Risks | Prompt injection, data poisoning, model theft |
| Privacy Risks | PII exposure, training data memorization |
| Compliance Risks | Regulatory violations, audit failures |
| Operational Risks | Vendor dependencies, skill gaps, costs |
| Reputation Risks | Public AI failures, ethical controversies |

Step 3: Risk Analysis and Measurement (MEASURE)

Risk Scoring Methodology

Likelihood Assessment

| Rating | Description | Frequency |
|---|---|---|
| 1 - Rare | May occur in exceptional circumstances | < 1% annual probability |
| 2 - Unlikely | Could occur but not expected | 1-10% annual probability |
| 3 - Possible | Might occur at some time | 10-50% annual probability |
| 4 - Likely | Will probably occur in most circumstances | 50-90% annual probability |
| 5 - Almost Certain | Expected to occur in most circumstances | > 90% annual probability |
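The likelihood table above can be applied in code so that every assessor maps probabilities to ratings the same way. The following is a minimal sketch, not a prescribed implementation: the function name `likelihood_rating` is hypothetical, and because the table's ranges share their boundary values (10%, 50%, 90%), the sketch assumes a boundary value falls into the higher band.

```python
from bisect import bisect_right

# Band boundaries taken from the likelihood table (as fractions, not percent).
# Assumption: a probability exactly on a boundary is placed in the higher band.
LIKELIHOOD_BOUNDS = [0.01, 0.10, 0.50, 0.90]
LIKELIHOOD_LABELS = ["Rare", "Unlikely", "Possible", "Likely", "Almost Certain"]


def likelihood_rating(annual_probability: float) -> tuple[int, str]:
    """Map an estimated annual probability (0.0-1.0) to a 1-5 rating and label."""
    if not 0.0 <= annual_probability <= 1.0:
        raise ValueError("annual_probability must be between 0 and 1")
    # bisect_right counts how many boundaries are <= the probability,
    # which is the zero-based index of the matching band.
    index = bisect_right(LIKELIHOOD_BOUNDS, annual_probability)
    return index + 1, LIKELIHOOD_LABELS[index]


if __name__ == "__main__":
    for p in (0.005, 0.05, 0.30, 0.70, 0.95):
        print(p, likelihood_rating(p))
```

Whichever boundary convention you choose, record it alongside the table so that ratings stay comparable across assessments.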

Impact Assessment

| Rating | Financial | Operational | Reputation |
|---|---|---|---|
| 1 - Minor | < $10K | Minimal disruption | Limited internal |
| 2 - Moderate | $10K-$100K | Hours of downtime | Local media attention |
| 3 - Major | $100K-$1M | Days of disruption | Regional coverage |
| 4 - Severe | $1M-$10M | Weeks of impact | National attention |
| 5 - Critical | > $10M | Business-threatening | International crisis |
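The impact table rates three dimensions separately, but the risk score needs a single impact value. The sketch below shows one possible way to combine them. It rests on two assumptions that are not stated in this guide: the overall impact is taken as the worst (highest) rating across the three dimensions, and a financial loss exactly on a band boundary falls into the higher band. The function names are hypothetical.

```python
from bisect import bisect_right

# Financial band boundaries in USD, taken from the impact table.
# Assumption: a loss exactly on a boundary is placed in the higher band.
FINANCIAL_BOUNDS = [10_000, 100_000, 1_000_000, 10_000_000]


def financial_impact_rating(estimated_loss_usd: float) -> int:
    """Map an estimated financial loss to the 1-5 financial impact rating."""
    if estimated_loss_usd < 0:
        raise ValueError("estimated_loss_usd must not be negative")
    return bisect_right(FINANCIAL_BOUNDS, estimated_loss_usd) + 1


def overall_impact(financial: int, operational: int, reputation: int) -> int:
    """Combine per-dimension ratings into one impact rating.

    Assumption: the worst-case dimension drives the overall rating.
    """
    ratings = (financial, operational, reputation)
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("each rating must be an integer from 1 to 5")
    return max(ratings)


if __name__ == "__main__":
    financial = financial_impact_rating(250_000)   # falls in the $100K-$1M band
    print(overall_impact(financial, operational=2, reputation=1))
```

Taking the maximum is conservative. An organization could instead weight the dimensions, as long as the rule is documented in the risk methodology.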

Risk Matrix

Risk Score = Likelihood × Impact
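As a minimal sketch, the formula above and the bands used in this guide (Low 1-5, Medium 6-11, High 12-15, Critical 16-25) can be expressed in a few lines. The function names `risk_score` and `risk_band` are illustrative, not part of any standard library or of the NIST AI RMF.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Return Likelihood x Impact for ratings on the 1-5 scales."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if value not in range(1, 6):
            raise ValueError(f"{name} must be an integer from 1 to 5")
    return likelihood * impact


def risk_band(score: int) -> str:
    """Translate a 1-25 risk score into the band names used in this guide."""
    if not 1 <= score <= 25:
        raise ValueError("score must be between 1 and 25")
    if score <= 5:
        return "Low"
    if score <= 11:
        return "Medium"
    if score <= 15:
        return "High"
    return "Critical"


if __name__ == "__main__":
    score = risk_score(likelihood=4, impact=3)
    print(score, risk_band(score))  # 12 High
```

A likelihood of 4 and an impact of 3 give a score of 12, which lands in the High band.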

Risk Heat Map:

IMPACT

1 2 3 4 5

┌────┬────┬────┬────┬────┐

5 │ 5 │ 10 │ 15 │ 20 │ 25 │

├────┼────┼────┼────┼────┤

L 4 │ 4 │ 8 │ 12 │ 16 │ 20 │

I ├────┼────┼────┼────┼────┤

K 3 │ 3 │ 6 │ 9 │ 12 │ 15 │

E ├────┼────┼────┼────┼────┤

L 2 │ 2 │ 4 │ 6 │ 8 │ 10 │

I ├────┼────┼────┼────┼────┤

H 1 │ 1 │ 2 │ 3 │ 4 │ 5 │

O └────┴────┴────┴────┴────┘

O

D 🟢 Low (1-5) 🟡 Medium (6-11)

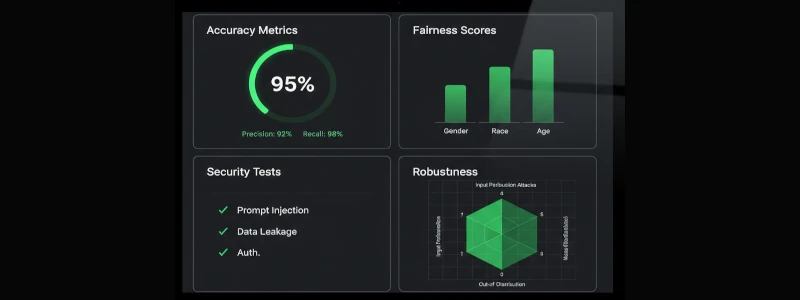

🟠 High (12-15) 🔴 Critical (16-25)Technical Risk Measurement

Performance Testing

def measure_model_risks(model, test_data):
    """
    Comprehensive model risk measurement.

    The helper functions called below (evaluate_accuracy, calculate_precision,
    and so on) are placeholders for your own evaluation code; they are not
    defined in this guide.
    """
    metrics = {}

    # 1. Accuracy and Performance
    metrics['accuracy'] = evaluate_accuracy(model, test_data)
    metrics['precision'] = calculate_precision(model, test_data)
    metrics['recall'] = calculate_recall(model, test_data)
    metrics['f1_score'] = calculate_f1(model, test_data)

    # 2. Fairness and Bias: one demographic parity result per protected attribute
    demographics = ['gender', 'race', 'age']
    metrics['fairness'] = {}
    for demo in demographics:
        metrics['fairness'][demo] = measure_demographic_parity(
            model, test_data, demo
        )

    # 3. Robustness
    metrics['adversarial_robustness'] = test_adversarial_attacks(model)
    metrics['distribution_shift'] = test_model_drift(model, test_data)

    # 4. Explainability
    metrics['feature_importance'] = calculate_shap_values(model)
    metrics['prediction_confidence'] = measure_confidence_distribution(model)

    # 5. Security
    metrics['prompt_injection_resistance'] = test_injection_attacks(model)
    metrics['data_leakage'] = test_training_data_extraction(model)

    return metrics
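The function above leaves its helpers undefined. To make the fairness step concrete, here is a hedged sketch of a demographic parity check written in plain Python. It is not the `measure_demographic_parity` helper referenced above: it takes lists of binary predictions and group labels directly (an assumed interface) and reports both the gap and the ratio between the lowest and highest selection rates. Libraries such as Fairlearn offer comparable metrics if you prefer a maintained implementation.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity(predictions, groups):
    """Summarize demographic parity as a difference and a ratio.

    difference: highest selection rate minus lowest (0.0 means parity)
    ratio:      lowest selection rate divided by highest (1.0 means parity)
    """
    rates = selection_rates(predictions, groups)
    if not rates:
        raise ValueError("no predictions supplied")
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "difference": highest - lowest,
        # If no group receives positive predictions, treat the groups as equal.
        "ratio": lowest / highest if highest > 0 else 1.0,
    }


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(demographic_parity(preds, groups))
```

In this toy input, group "a" is selected 75% of the time and group "b" 25% of the time, so the gap is 0.5 and the ratio is about 0.33. Decide in advance whether your targets refer to the gap or the ratio, since the two move in opposite directions.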

Step 4: Risk Prioritization and Treatment (MANAGE)

Risk Register Template

RISK ID: AI-2025-001

SYSTEM: Customer Service Chatbot

RISK CATEGORY: Bias & Fairness

DESCRIPTION:

Model shows 15% lower accuracy for non-native English speakers, potentially violating equal service requirements.

LIKELIHOOD: 4 (Likely)

IMPACT: 3 (Major - regulatory and reputation risk)

INHERENT RISK SCORE: 12 (High)

CURRENT CONTROLS:

- Human escalation available

- Monthly bias monitoring

- Quarterly model retraining

RESIDUAL RISK SCORE: 9 (Medium)

RISK OWNER: Head of AI Products

DUE DATE: Q1 2026

TREATMENT PLAN:

1. Expand training data with diverse English dialects

2. Implement accent-robust preprocessing

3. Add confidence thresholds for non-native speakers

4. Enhanced monitoring dashboard

TARGET RESIDUAL RISK: 6 (Medium)

BUDGET: $50,000

STATUS: In Progress

Risk Treatment Strategies

1. Avoid

Eliminate the risk by not deploying the AI system or removing risky features.

- Example: Cancel deployment of facial recognition in sensitive contexts

- When to use: Risk exceeds appetite and cannot be adequately mitigated

2. Mitigate

Implement controls to reduce likelihood or impact to acceptable levels.

- Example: Add human review for high-stakes AI decisions

- When to use: Most common strategy for manageable risks

3. Transfer

Share risk with third parties through insurance, contracts, or partnerships.

- Example: Use enterprise API provider with SLA and liability coverage

- When to use: Risks whose financial impact can be shifted to a third party through contract terms or insurance

4. Accept

Acknowledge risk and proceed with appropriate monitoring.

- Example: Accept minor edge case performance issues

- When to use: Low-priority risks within appetite

Control Implementation

Technical Controls

- Input Validation - Sanitize and validate all inputs

- Output Filtering - Screen AI outputs for harmful content

- Confidence Thresholds - Require human review for low-confidence predictions

- Rate Limiting - Prevent resource exhaustion

- Monitoring & Alerting - Detect anomalies in real-time

- Model Versioning - Enable rollback to safe versions
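Of the technical controls listed above, the confidence threshold is among the simplest to prototype. The sketch below is illustrative only: the `Decision` type, the `route_prediction` function, and the default threshold of 0.8 are assumptions chosen for the example, and a real threshold should be derived from validation data and the system's risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route_prediction(label: str, confidence: float, threshold: float = 0.8) -> Decision:
    """Flag predictions whose confidence is below the threshold for human review.

    The 0.8 default is a placeholder, not a recommended value.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return Decision(label, confidence, needs_human_review=confidence < threshold)


if __name__ == "__main__":
    print(route_prediction("approve", 0.93))  # confident: handled automatically
    print(route_prediction("approve", 0.65))  # uncertain: sent to a reviewer
```

Logging each `Decision` also gives the Human Oversight control an audit trail of which outputs were escalated and why.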

Procedural Controls

- Change Management - Approval process for model updates

- Incident Response - Procedures for AI failures

- Human Oversight - Regular review of AI decisions

- Training Programs - Educate users and operators

- Third-Party Audits - Independent validation

Step 5: Continuous Monitoring and Review

Key Performance Indicators (KPIs)

AI Risk Management KPIs:

Operational Metrics:

- Model Accuracy: 95.2% (Target: ≥95%)

- Average Response Time: 1.2s (Target: <2s)

- System Uptime: 99.7% (Target: ≥99.5%)

- Error Rate: 0.8% (Target: <1%)

Safety Metrics:

- Bias Score (Demographic Parity): 0.92 (Target: ≥0.90)

- Adversarial Robustness: 87% (Target: ≥85%)

- Content Filtering Rate: 99.5% (Target: ≥99%)

- Human Escalation Rate: 5.2% (Target: <10%)

Governance Metrics:

- Risk Assessments Completed: 12/12 (Target: 100%)

- High Risks with Treatment Plans: 8/8 (Target: 100%)

- Overdue Risk Actions: 1 (Target: 0)

- Model Reviews Completed: 4/4 quarterly (Target: 100%)

Security Metrics:

- Detected Attacks: 23 blocked (Target: 100% blocked)

- Security Incidents: 0 (Target: 0)

- Vulnerability Remediation Time: 5 days (Target: <7 days)

Ongoing Risk Review Process

- Daily: Automated monitoring alerts, performance dashboards

- Weekly: Team review of metrics, incident reports

- Monthly: Risk register updates, control effectiveness reviews

- Quarterly: Comprehensive model validation, stakeholder reporting

- Annually: Full risk assessment refresh, program evaluation
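The daily automated checks can start as a simple comparison of observed KPI values against their targets. The following sketch uses a subset of the sample KPIs and targets from this step. The metric keys, the `KpiTarget` type, and the `find_breaches` function are hypothetical names chosen for illustration.

```python
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class KpiTarget:
    name: str
    compare: Callable[[float, float], bool]  # compare(observed, target) -> meets target?
    target: float


# Targets copied from the sample KPI list; the key names are illustrative.
TARGETS = (
    KpiTarget("model_accuracy_pct", operator.ge, 95.0),
    KpiTarget("avg_response_time_s", operator.lt, 2.0),
    KpiTarget("system_uptime_pct", operator.ge, 99.5),
    KpiTarget("error_rate_pct", operator.lt, 1.0),
    KpiTarget("demographic_parity", operator.ge, 0.90),
    KpiTarget("overdue_risk_actions", operator.le, 0.0),
)


def find_breaches(observed: dict, targets=TARGETS) -> list:
    """Return a message for every KPI that is missing or misses its target."""
    breaches = []
    for kpi in targets:
        if kpi.name not in observed:
            breaches.append(f"{kpi.name}: no value reported")
        elif not kpi.compare(observed[kpi.name], kpi.target):
            breaches.append(f"{kpi.name}: {observed[kpi.name]} misses target {kpi.target}")
    return breaches


if __name__ == "__main__":
    observed = {
        "model_accuracy_pct": 95.2,
        "avg_response_time_s": 1.2,
        "system_uptime_pct": 99.7,
        "error_rate_pct": 0.8,
        "demographic_parity": 0.92,
        "overdue_risk_actions": 1,
    }
    for message in find_breaches(observed):
        print(message)
```

With the sample values from the KPI list, only the overdue risk action is reported. Treating a missing value as a breach is a deliberate choice here, so that a silent monitoring failure does not look like a healthy system.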

Real-World Implementation Example

Case Study: Credit Scoring AI

Initial State (Pre-Assessment)

- AI model deployed without formal risk assessment

- No bias testing conducted

- Limited monitoring in production

- Regulatory concerns from audit team

Risk Assessment Process

- GOVERN: Established AI governance committee, defined risk appetite

- MAP: Documented system, identified stakeholders, recognized bias risks

- MEASURE: Conducted fairness analysis, found 12% disparity across demographics

- MANAGE: Implemented bias mitigation, added human review for edge cases

Results

- Demographic parity gap reduced from 12% to 3%

- Regulatory approval obtained

- Continuous monitoring dashboard deployed

- Quarterly audits established

- Risk score reduced from 16 (Critical) to 6 (Medium)

Common Pitfalls and How to Avoid Them

⚠️ Warning: Common Mistakes

❌ One-time assessment instead of continuous monitoring

❌ Focusing only on technical risks, ignoring ethics/fairness

❌ No executive sponsorship or governance structure

❌ Inadequate documentation of AI systems

❌ Treating AI risks separately from enterprise risk management

❌ Insufficient testing before production deployment

❌ No incident response plan for AI failures

Success Factors

- Executive Buy-In: Secure leadership commitment and resources

- Cross-Functional Teams: Include technical, legal, compliance, ethics perspectives

- Start Small: Pilot with one high-risk system, then scale

- Integrate with Existing Processes: Leverage current risk management frameworks

- Automate Where Possible: Use tools for continuous testing and monitoring

- Document Everything: Maintain comprehensive records for audits

- Regular Training: Educate teams on evolving AI risks

Tools and Resources

Risk Assessment Tools

- AI Fairness 360 (IBM) - Bias detection and mitigation

- Fairlearn (Microsoft) - Fairness assessment toolkit

- AI Risk Management Toolkit (NIST) - Implementation guidance

- OWASP AI Testing Tools - Security vulnerability scanning

- Model Cards Toolkit - Documentation templates

Helpful Resources

- NIST AI RMF Playbook - Detailed implementation guidance

- ISO/IEC 23894 - Risk management guidance for AI

- EU AI Act - Regulatory requirements for high-risk AI

- AI Incident Database - Learn from past AI failures

Conclusion

Implementing an AI risk assessment framework is no longer optional; it's essential for responsible AI deployment and regulatory compliance. Key takeaways:

- Use Structured Frameworks: NIST AI RMF provides proven methodology

- Make It Continuous: Risk management is ongoing, not one-time

- Measure What Matters: Technical performance, fairness, security, and ethics

- Engage Stakeholders: Risk management requires cross-functional collaboration

- Document Rigorously: Comprehensive records support compliance and improvement

Organizations that implement robust AI risk assessment programs will be better positioned to capitalize on AI opportunities while managing downside risks effectively.


References and Further Reading

- National Institute of Standards and Technology. (2023). "AI Risk Management Framework (AI RMF 1.0)." https://www.nist.gov/itl/ai-risk-management-framework

- NIST. "NIST AI 100-1 Artificial Intelligence Risk Management Framework." https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

- Securiti. "NIST AI Risk Management Framework Explained." https://securiti.ai/nist-ai-risk-management-framework/

- OneTrust. "Navigating the NIST AI Risk Management Framework with confidence." https://www.onetrust.com/blog/

- AuditBoard. "Safeguard the Future of AI: The Core Functions of the NIST AI RMF." https://auditboard.com/blog/nist-ai-rmf

- Hyperproof. "NIST AI Risk Management Framework: How to Govern AI Risk." https://hyperproof.io/

- Holistic AI. "The NIST's AI Risk Management Framework Playbook: A Deep Dive." https://www.holisticai.com/blog/